Fargate Pods on EKS and Pull Public Images Through ECR Cache

I have long been considering adding EKS Fargate to my blog site. Last weekend I rolled out several releases, one of which enabled EKS Fargate for a microservice.

EKS and Fargate make it straightforward to run Kubernetes-based applications on AWS by removing the need to provision and manage infrastructure for Pods.

AWS Fargate is a technology that provides on-demand, right-sized compute capacity for containers. With AWS Fargate, you don't have to provision, configure, or scale groups of virtual machines on your own to run containers. You also don't need to choose server types, decide when to scale your node groups, or optimize cluster packing. You can control which pods start on Fargate and how they run with Fargate profiles. Fargate profiles are defined as part of your Amazon EKS cluster.

Amazon EKS integrates Kubernetes with AWS Fargate by using controllers that are built by AWS using the upstream, extensible model provided by Kubernetes. These controllers run as part of the Amazon EKS managed Kubernetes control plane and are responsible for scheduling native Kubernetes pods onto Fargate. The Fargate controllers include a new scheduler that runs alongside the default Kubernetes scheduler in addition to several mutating and validating admission controllers. When you start a pod that meets the criteria for running on Fargate, the Fargate controllers that are running in the cluster recognize, update, and schedule the pod onto Fargate.

Along with this batch of releases, the container runtime of my EKS worker nodes was switched to containerd, because Kubernetes removed Dockershim in version 1.24. AWS has also announced that the default runtime will change from Docker to containerd starting with EKS 1.23. Changing the runtime is easy: all you need to do is add the "--container-runtime containerd" option to the bootstrap script line in the node's user data.
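Here is a rough sketch of what that user data line can look like. It assumes self-managed nodes built from the EKS-optimized Amazon Linux 2 AMI, whose /etc/eks/bootstrap.sh accepts the "--container-runtime" option, and a cluster named blog. The render_userdata helper is hypothetical: it only prints the script so the flag placement is easy to see.

```shell
# Hypothetical helper: print EC2 user data for a self-managed EKS node.
# Assumes the EKS-optimized Amazon Linux 2 AMI, which ships /etc/eks/bootstrap.sh.
render_userdata() {
  local cluster_name=$1
  cat <<EOF
#!/bin/bash
set -o xtrace
# Join the cluster and use containerd instead of Docker as the runtime
/etc/eks/bootstrap.sh ${cluster_name} --container-runtime containerd
EOF
}
```

For example, "render_userdata blog > userdata.sh" produces a file you could paste into a launch template's user data.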

I also tried the ECR pull through cache feature to pull ECR Public images from within the private EKS cluster.

In this blog post, I will demonstrate how to configure EKS Fargate by deploying a sample Kubernetes workload as Pods running on Fargate in an EKS cluster. For the image retrieval step, I will show how to use the ECR pull through cache feature to provide the container image to the Fargate Pod.

Create a Fargate Pod Execution Role

When an EKS cluster creates Fargate Pods, the components that run on the Fargate infrastructure must make calls to AWS APIs on your behalf. The EKS Pod execution role provides the IAM permissions to do this.

```shell
vim pod-execution-role-trust-policy.json
```

```json
{
  "Version": "2012-10-17",
  "Statement": [
    {
      "Effect": "Allow",
      "Condition": {
        "ArnLike": {
          "aws:SourceArn": "arn:aws:eks:us-west-2:111122223333:fargateprofile/blog/*"
        }
      },
      "Principal": {
        "Service": "eks-fargate-pods.amazonaws.com"
      },
      "Action": "sts:AssumeRole"
    }
  ]
}
```
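You can also avoid hand-editing the account ID, Region and cluster name by generating the same trust policy from variables. This is a minimal sketch. render_trust_policy is a hypothetical helper name, and the output is identical in structure to the file above.

```shell
# Hypothetical helper: print the Fargate Pod execution role trust policy,
# scoped to all Fargate profiles of one cluster in one account/Region.
render_trust_policy() {
  local account_id=$1 region=$2 cluster=$3
  cat <<EOF
{
  "Version": "2012-10-17",
  "Statement": [
    {
      "Effect": "Allow",
      "Condition": {
        "ArnLike": {
          "aws:SourceArn": "arn:aws:eks:${region}:${account_id}:fargateprofile/${cluster}/*"
        }
      },
      "Principal": {
        "Service": "eks-fargate-pods.amazonaws.com"
      },
      "Action": "sts:AssumeRole"
    }
  ]
}
EOF
}
```

Usage, looking up the account ID with the AWS CLI: render_trust_policy "$(aws sts get-caller-identity --query Account --output text)" us-west-2 blog > pod-execution-role-trust-policy.json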

Create a Pod execution IAM role.

```shell
aws iam create-role \
  --role-name AmazonEKSFargatePodExecutionRole \
  --assume-role-policy-document file://"pod-execution-role-trust-policy.json"
```

```json
{
  "Role": {
    "Path": "/",
    "RoleName": "AmazonEKSFargatePodExecutionRole",
    "RoleId": "AROARZX5OWTOFEJEKBO6Y",
    "Arn": "arn:aws:iam::111122223333:role/AmazonEKSFargatePodExecutionRole",
    "CreateDate": "2022-08-07T13:52:40+00:00",
    "AssumeRolePolicyDocument": {
      "Version": "2012-10-17",
      "Statement": [
        {
          "Effect": "Allow",
          "Condition": {
            "ArnLike": {
              "aws:SourceArn": "arn:aws:eks:us-west-2:111122223333:fargateprofile/blog/*"
            }
          },
          "Principal": {
            "Service": "eks-fargate-pods.amazonaws.com"
          },
          "Action": "sts:AssumeRole"
        }
      ]
    }
  }
}
```

Attach the required EKS managed IAM policy to the role.

```shell
aws iam attach-role-policy \
  --policy-arn arn:aws:iam::aws:policy/AmazonEKSFargatePodExecutionRolePolicy \
  --role-name AmazonEKSFargatePodExecutionRole
```
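attach-role-policy prints nothing on success, so a quick check that the policy is really attached can be handy. The sketch below wraps "aws iam list-attached-role-policies". The role_has_policy function name is my own, not part of the AWS CLI.

```shell
# Hypothetical helper: succeed only if <policy-name> is attached to <role-name>.
role_has_policy() {
  local role=$1 policy=$2
  # --output text prints the policy names tab-separated on one line;
  # split them into lines and look for an exact match.
  aws iam list-attached-role-policies \
    --role-name "$role" \
    --query 'AttachedPolicies[].PolicyName' \
    --output text | tr '\t' '\n' | grep -qxF "$policy"
}
```

Usage: role_has_policy AmazonEKSFargatePodExecutionRole AmazonEKSFargatePodExecutionRolePolicy && echo attached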

Create a Fargate profile for your cluster

Before you can schedule Pods on Fargate in an EKS cluster, you must define a Fargate profile that specifies which Pods should use Fargate when they are launched.

The Fargate profile allows an administrator to declare which Pods run on Fargate. This declaration is done through the profile’s selectors. Each profile can have up to five selectors that contain a namespace and optional labels. You must define a namespace for every selector. The label field consists of multiple optional key-value pairs. Pods that match a selector (by matching a namespace for the selector and all of the labels specified in the selector) are scheduled on Fargate. If a namespace selector is defined without any labels, Amazon EKS attempts to schedule all pods that run in that namespace onto Fargate using the profile. If a to-be-scheduled pod matches any of the selectors in the Fargate profile, then that pod is scheduled on Fargate.
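To make the matching rule concrete, here is a simplified model of how a single selector is evaluated. It is not how EKS implements it; the real decision is made by the Fargate admission controllers in the control plane. It assumes exact namespace matching and represents labels as comma-separated key=value lists. selector_matches is a hypothetical name.

```shell
# Simplified model of one Fargate profile selector (not the EKS implementation).
# selector_matches <selector-ns> <selector-labels> <pod-ns> <pod-labels>
# Labels are comma-separated key=value pairs, e.g. "app=demo-app,tier=web".
selector_matches() {
  local sel_ns=$1 sel_labels=$2 pod_ns=$3 pod_labels=$4
  local old_ifs=$IFS label
  # The namespace must always match
  [ "$sel_ns" = "$pod_ns" ] || return 1
  # Every label in the selector must also be present on the Pod;
  # an empty label list matches every Pod in the namespace.
  IFS=','
  for label in $sel_labels; do
    case ",$pod_labels," in
      *",$label,"*) ;;
      *) IFS=$old_ifs; return 1 ;;
    esac
  done
  IFS=$old_ifs
  return 0
}
```

A Pod is scheduled on Fargate if any one of a profile's selectors matches. With the namespace-only selector used below, selector_matches fargate "" fargate "app=demo-app" succeeds, while a Pod in the default namespace would not match.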

Create a file that defines the EKS Fargate profile. Note that Fargate profiles only accept private subnets.

```shell
vim demo-kube-system-profile.json
```

```json
{
  "fargateProfileName": "demo-fargate",
  "clusterName": "blog",
  "podExecutionRoleArn": "arn:aws:iam::111122223333:role/AmazonEKSFargatePodExecutionRole",
  "subnets": [
    "subnet-01xxx50",
    "subnet-0cxxx3d"
  ],
  "selectors": [
    {
      "namespace": "fargate"
    }
  ]
}
```

```shell
aws eks create-fargate-profile --cli-input-json file://demo-kube-system-profile.json
```

```json
{
  "fargateProfile": {
    "fargateProfileName": "demo-fargate",
    "fargateProfileArn": "arn:aws:eks:us-west-2:111122223333:fargateprofile/blog/demo-fargate/90c13c56-7a71-3c9f-f50c-165984bd60e0",
    "clusterName": "blog",
    "createdAt": "2022-08-07T22:19:38.181000+08:00",
    "podExecutionRoleArn": "arn:aws:iam::111122223333:role/AmazonEKSFargatePodExecutionRole",
    "subnets": [
      "subnet-0cxxx3d",
      "subnet-01xxx50"
    ],
    "selectors": [
      {
        "namespace": "fargate"
      }
    ],
    "status": "CREATING",
    "tags": {}
  }
}
```
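The profile starts in the CREATING state, and Pods are only placed on Fargate once it becomes ACTIVE. Here is a small, generic polling sketch. wait_for_output is a hypothetical helper that reruns a command until its output equals an expected string.

```shell
# Hypothetical helper: rerun a command until its output equals <expected>.
# wait_for_output <expected> <max-attempts> <interval-seconds> <command...>
wait_for_output() {
  local expected=$1 attempts=$2 interval=$3
  shift 3
  local i=1 out
  while [ "$i" -le "$attempts" ]; do
    # Treat a failing command as "not ready yet"
    out=$("$@" 2>/dev/null) || out=""
    if [ "$out" = "$expected" ]; then
      return 0
    fi
    # Do not sleep after the final attempt
    if [ "$i" -lt "$attempts" ]; then
      sleep "$interval"
    fi
    i=$((i + 1))
  done
  return 1
}
```

Usage: wait_for_output ACTIVE 30 10 aws eks describe-fargate-profile --cluster-name blog --fargate-profile-name demo-fargate --query fargateProfile.status --output text

Recent AWS CLI versions also ship a built-in waiter for this, "aws eks wait fargate-profile-active", which is simpler if it is available in your CLI version.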

For this demonstration, I want the Fargate Pod deployed separately from existing workloads, so I create a new namespace named fargate, which matches the profile's selector.

```shell
kubectl create ns fargate
```

```text
namespace/fargate created
```

Prepare Container Image Retrieval Mechanism

Now for the Pod deployment. But hold on a second: the workload runs in a private network zone, and we need a clean way to retrieve a container image hosted in a public registry, in this case the ECR Public Gallery. Is that feasible?

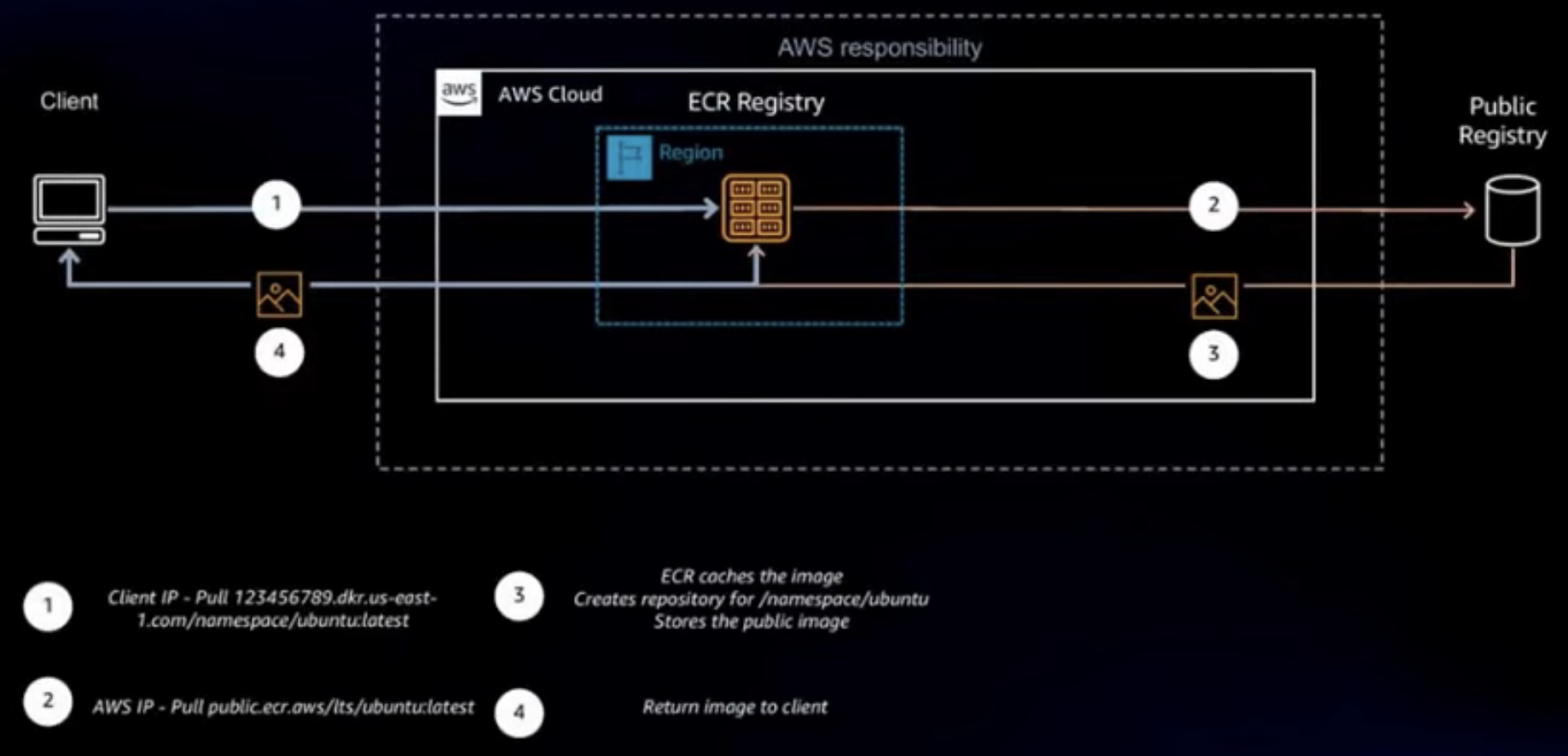

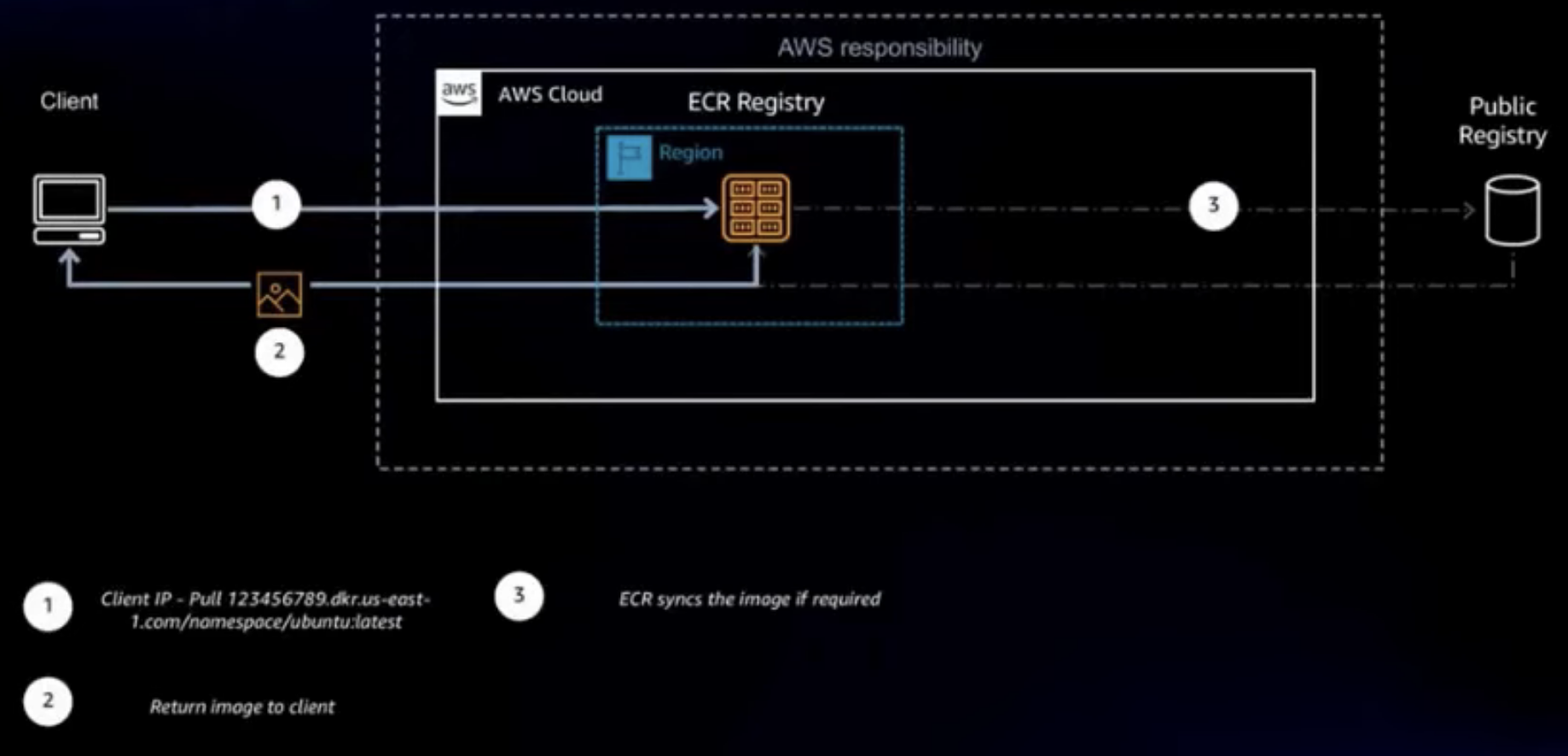

Well, ECR has a feature called pull through cache repositories, which offers improved performance, security, and availability for container images sourced from public registries. Images in pull through cache repositories are automatically kept in sync with the upstream public registries, eliminating the manual work of pulling images and periodically updating them.

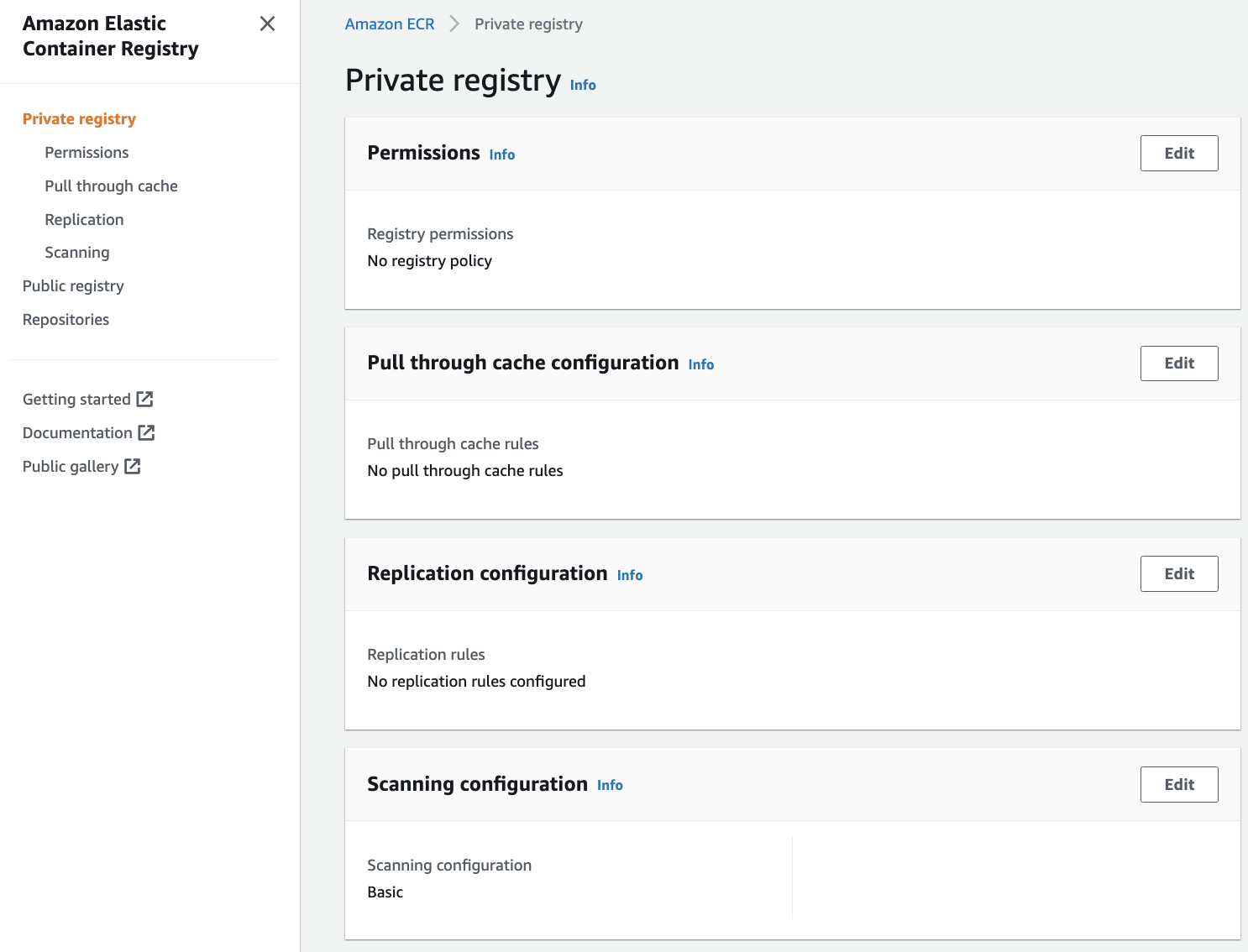

To leverage this feature, first modify the private registry's settings to add a rule that references the upstream, publicly accessible registry. In the ECR console, select Private registry, then select Edit in the Pull through cache configuration panel to change settings.

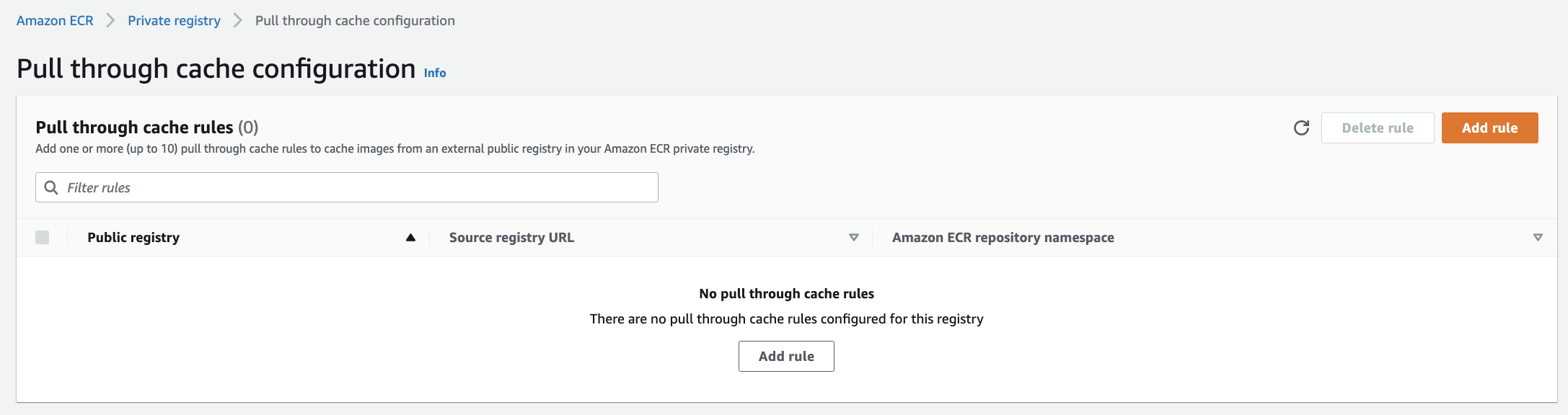

This takes me to the Pull through cache configuration page.

Select Add rule.

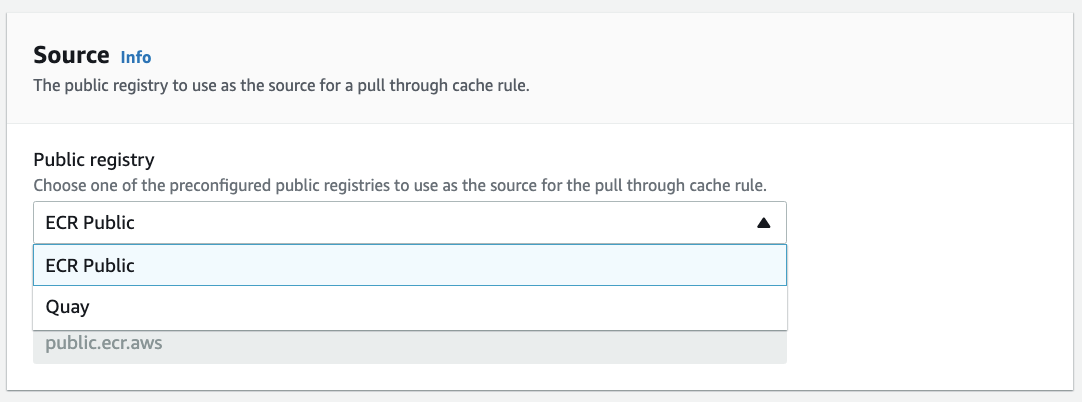

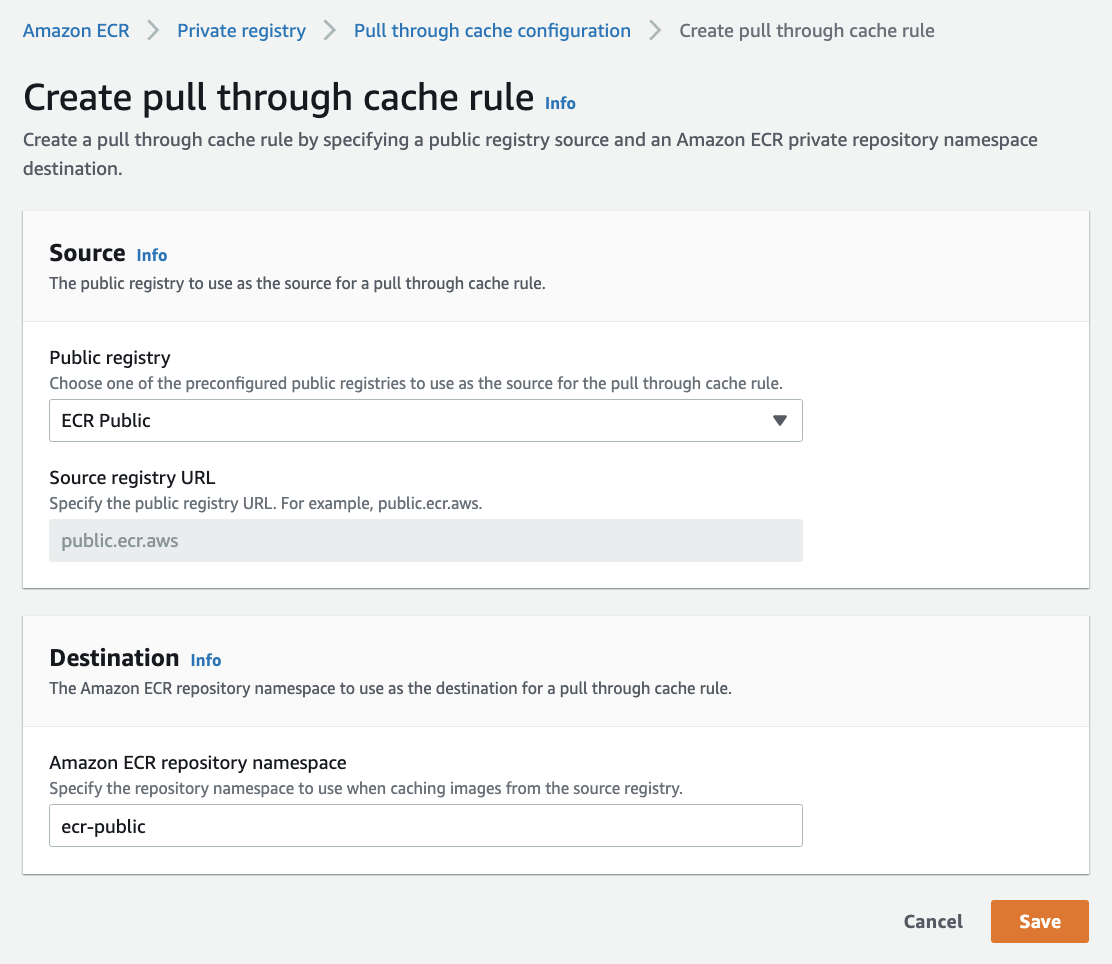

On the Create pull through cache rule page, choose the upstream registry. I chose ECR Public, because in this example I will demonstrate how to retrieve the Nginx container image from the ECR Public Gallery.

Set a namespace, which is used to refer to cached images in docker pull commands. Here, I used ecr-public.

Select Save.

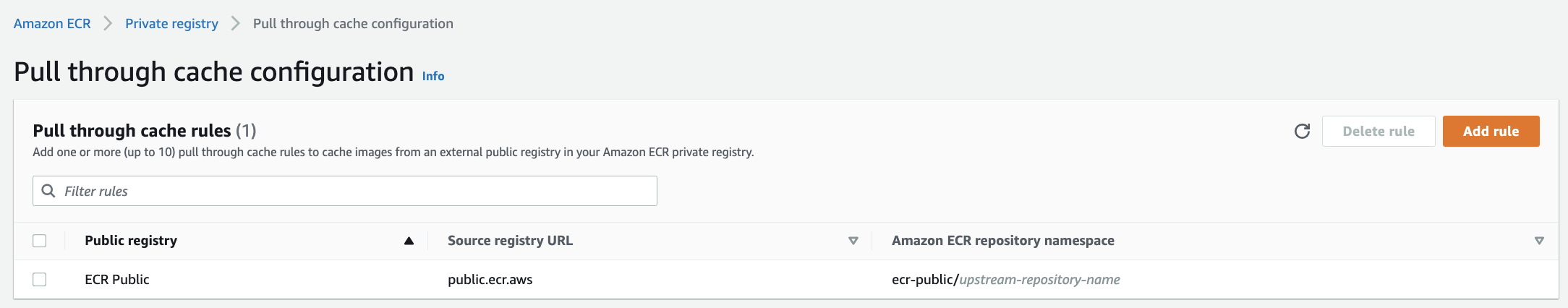

This takes me back to the Pull through cache configuration page where my newly configured rule is listed.
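If you prefer the CLI to the console, the same rule can be created with "aws ecr create-pull-through-cache-rule". Below is a minimal sketch, assuming the ecr-public namespace (called the repository prefix in the CLI) and us-west-2. create_cache_rule is a hypothetical wrapper name.

```shell
# Hypothetical wrapper: create a pull through cache rule that maps
# <prefix>/... in the private registry to the ECR Public registry.
create_cache_rule() {
  local prefix=$1 region=$2
  aws ecr create-pull-through-cache-rule \
    --ecr-repository-prefix "$prefix" \
    --upstream-registry-url public.ecr.aws \
    --region "$region"
}
```

Usage: create_cache_rule ecr-public us-west-2, then list the configured rules with "aws ecr describe-pull-through-cache-rules --region us-west-2".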

Now, I'm ready to utilize the cache repository when pulling images.

To reference an image, specify the namespace I chose in the pull URL, using the format `<accountId>.dkr.ecr.<region>.amazonaws.com/<namespace>/<sourcerepo>:<tag>`. When an image is pulled, ECR checks the cache repository associated with the namespace for the image.
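The URL format above is simple string assembly. This sketch builds it from its parts, which avoids typos when the account ID or Region changes. ecr_cache_uri is a hypothetical helper.

```shell
# Hypothetical helper: build a pull through cache image URI.
# ecr_cache_uri <account-id> <region> <namespace> <source-repo> <tag>
ecr_cache_uri() {
  local account_id=$1 region=$2 namespace=$3 source_repo=$4 tag=$5
  printf '%s.dkr.ecr.%s.amazonaws.com/%s/%s:%s\n' \
    "$account_id" "$region" "$namespace" "$source_repo" "$tag"
}
```

For the Nginx image used below: ecr_cache_uri "$(aws sts get-caller-identity --query Account --output text)" us-west-2 ecr-public nginx/nginx stable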

In this case, the cache repository doesn't exist yet, and we don't need to create it manually. The image is fetched from the upstream repository in the public registry associated with the namespace, and then stored in a new cache repository that is created automatically.

First, authenticate with the registry.

```shell
aws ecr get-login-password --region us-west-2 | docker login --username AWS --password-stdin 111122223333.dkr.ecr.us-west-2.amazonaws.com/ecr-public
```

```text
Login Succeeded
```

Replace "111122223333" with your AWS account ID and "us-west-2" with the AWS Region you are using.

Pull the Nginx image from ECR Public into the cache.

```shell
docker pull 111122223333.dkr.ecr.us-west-2.amazonaws.com/ecr-public/nginx/nginx:stable
```

```text
stable: Pulling from ecr-public/nginx/nginx
1efc276f4ff9: Pull complete
f083c3ec9303: Pull complete
4670ba2d31b0: Pull complete
883d1b430e78: Pull complete
80453319cc3e: Pull complete
75efce6a4674: Pull complete
Digest: sha256:d45e9b5b60373b9ac05510eea59e3109940d12c334c4a12ddc60b2b84913760f
Status: Downloaded newer image for 111122223333.dkr.ecr.us-west-2.amazonaws.com/ecr-public/nginx/nginx:stable
111122223333.dkr.ecr.us-west-2.amazonaws.com/ecr-public/nginx/nginx:stable
```

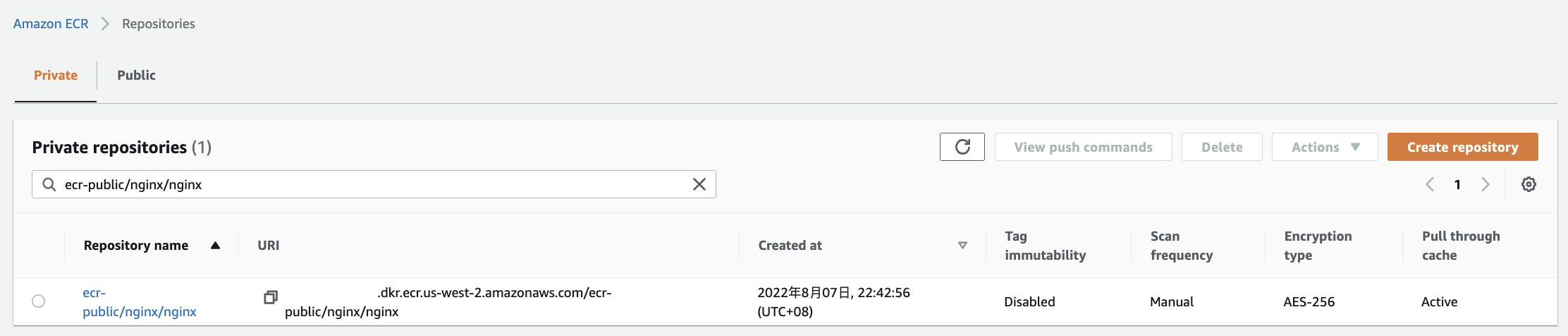

In the ECR console, the Private repositories page shows that a new private repository has been created containing the image just pulled, together with an indication that "Pull through cache" is active.

Deploy a Pod to the EKS cluster

```shell
kubectl create deployment demo-app --image=111122223333.dkr.ecr.us-west-2.amazonaws.com/ecr-public/nginx/nginx:stable -n fargate
```

```text
deployment.apps/demo-app created
```
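If you would rather keep the workload declarative, a roughly equivalent Deployment manifest can be generated and applied with "kubectl apply -f -". This is a sketch under my own assumptions: render_deployment is a hypothetical helper, the container name nginx and port 80 are my choices, and the app label mirrors the one kubectl create deployment adds. The namespace must match the Fargate profile selector (fargate) for the Pod to land on Fargate.

```shell
# Hypothetical helper: print a minimal Deployment manifest.
# render_deployment <name> <namespace> <image>
render_deployment() {
  local name=$1 namespace=$2 image=$3
  cat <<EOF
apiVersion: apps/v1
kind: Deployment
metadata:
  name: ${name}
  namespace: ${namespace}
  labels:
    app: ${name}
spec:
  replicas: 1
  selector:
    matchLabels:
      app: ${name}
  template:
    metadata:
      labels:
        app: ${name}
    spec:
      containers:
        - name: nginx
          image: ${image}
          ports:
            - containerPort: 80
EOF
}
```

Usage: render_deployment demo-app fargate 111122223333.dkr.ecr.us-west-2.amazonaws.com/ecr-public/nginx/nginx:stable | kubectl apply -f -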

Check the state of my Pods.

```shell
kubectl get pods -n fargate
```

```text
NAME                       READY   STATUS    RESTARTS   AGE
demo-app-7786c6655-9p2fr   0/1     Pending   0          77s
```

Listing the nodes shows a new node whose name is prefixed with "fargate-". It represents the compute capacity that has been provisioned to run the Fargate Pod.

```shell
kubectl get nodes
```

```text
NAME                                             STATUS   ROLES    AGE    VERSION
fargate-ip-10-0-xxx.us-west-2.compute.internal   Ready    <none>   25s    v1.22.6-eks-14c7a48
ip-10-0-xxx.us-west-2.compute.internal           Ready    <none>   2d2h   v1.22.9-eks-810597c
ip-10-0-xxx.us-west-2.compute.internal           Ready    <none>   94m    v1.22.9-eks-810597c
ip-10-0-xxx.us-west-2.compute.internal           Ready    <none>   12m    v1.22.9-eks-810597c
ip-10-0-xxx.us-west-2.compute.internal           Ready    <none>   107m   v1.22.9-eks-810597c
```
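On a cluster with many EC2 nodes, it helps to filter the list down to Fargate nodes. This sketch relies on the "fargate-" name prefix seen above. fargate_nodes is a hypothetical helper that reads kubectl get nodes output on stdin.

```shell
# Hypothetical helper: print only the Fargate node names from
# "kubectl get nodes" output (skips the header row).
fargate_nodes() {
  awk 'NR > 1 && $1 ~ /^fargate-/ { print $1 }'
}
```

Usage: kubectl get nodes | fargate_nodes. Fargate nodes also carry the label eks.amazonaws.com/compute-type=fargate, so "kubectl get nodes -l eks.amazonaws.com/compute-type=fargate" is an alternative that does not depend on the name prefix.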

After a short time, re-run the kubectl get pods command. The Pod now has a status of "Running", meaning it has been successfully deployed onto EKS Fargate.

```shell
kubectl get pods -n fargate
```

```text
NAME                       READY   STATUS    RESTARTS   AGE
demo-app-7786c6655-9p2fr   1/1     Running   0          105s
```

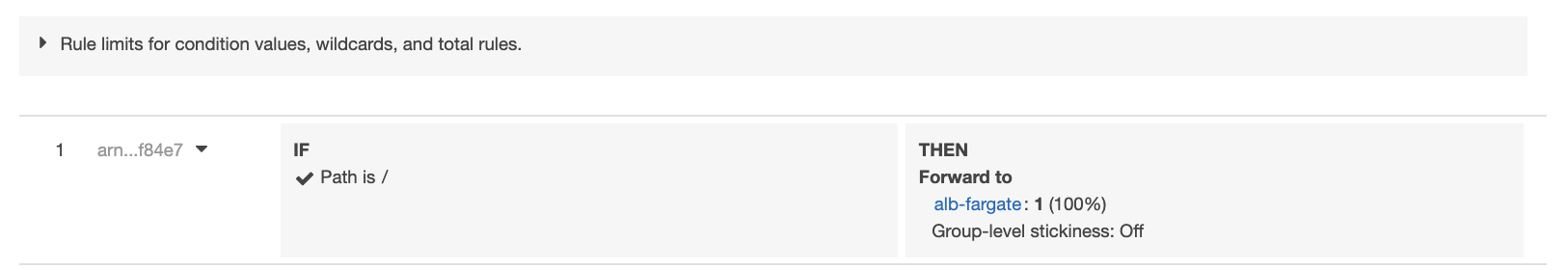

Final Step - Configure ALB

Configure a target group and an ALB listener to forward the relevant traffic to the Fargate Pod.
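Fargate Pods do not run on EC2 instances you manage, so the target group has to use the ip target type and point at the Pod IPs. Here is a minimal CLI sketch of the registration step. It assumes an existing ip-type target group and uses the app=demo-app label that kubectl create deployment adds. register_pod_ips is a hypothetical helper. Pod IPs change whenever a Pod is recreated, and the AWS Load Balancer Controller is the usual way to keep targets in sync automatically.

```shell
# Hypothetical helper: register the IPs of matching Pods in an ip-type target group.
# register_pod_ips <target-group-arn> <namespace> <label-selector> [port]
register_pod_ips() {
  local tg_arn=$1 namespace=$2 selector=$3 port=${4:-80}
  local ips targets ip
  ips=$(kubectl get pods -n "$namespace" -l "$selector" \
    -o jsonpath='{.items[*].status.podIP}')
  if [ -z "$ips" ]; then
    echo "no pod IPs found for $selector in $namespace" >&2
    return 1
  fi
  targets=""
  for ip in $ips; do
    targets="$targets Id=$ip,Port=$port"
  done
  # $targets is left unquoted on purpose: each Id=...,Port=... becomes one argument
  aws elbv2 register-targets --target-group-arn "$tg_arn" --targets $targets
}
```

Usage: register_pod_ips "$TARGET_GROUP_ARN" fargate app=demo-app, where TARGET_GROUP_ARN is a placeholder for your own target group's ARN.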

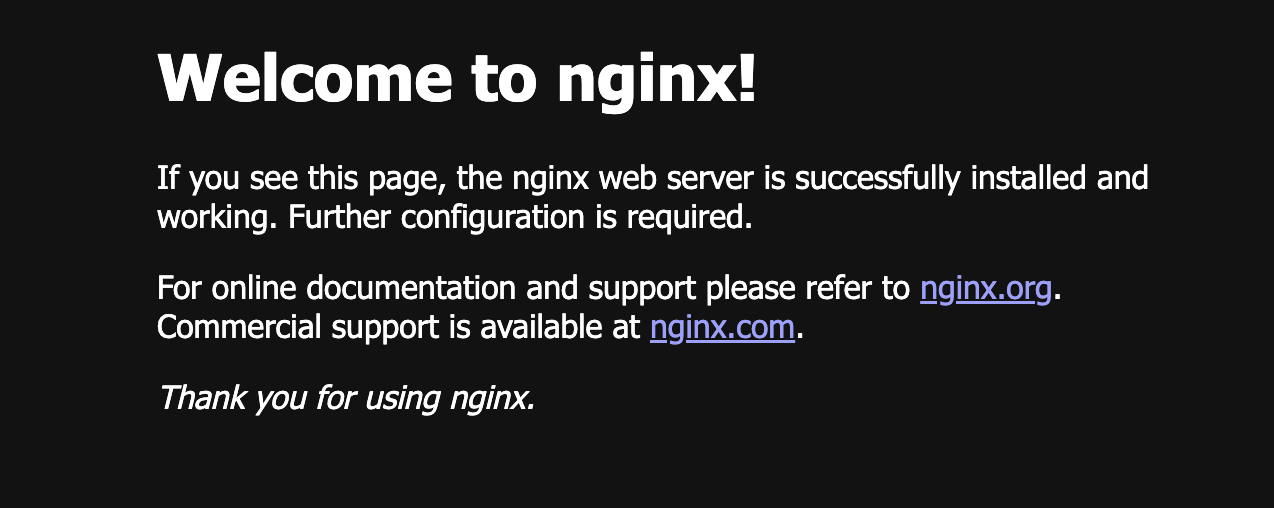

Opening the URL in a browser shows the default Nginx welcome page.

Cleanup

```shell
kubectl delete deploy -n fargate demo-app
```

```text
deployment.apps "demo-app" deleted
```

Once the Pod is gone, the Fargate node disappears from the node list as well.

```shell
kubectl get nodes
```

```text
NAME                                     STATUS   ROLES    AGE     VERSION
ip-10-0-xxx.us-west-2.compute.internal   Ready    <none>   3d12h   v1.22.9-eks-810597c
ip-10-0-xxx.us-west-2.compute.internal   Ready    <none>   35h     v1.22.9-eks-810597c
ip-10-0-xxx.us-west-2.compute.internal   Ready    <none>   34h     v1.22.9-eks-810597c
ip-10-0-xxx.us-west-2.compute.internal   Ready    <none>   35h     v1.22.9-eks-810597c
```
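Deleting the Deployment leaves the namespace, the Fargate profile and the pull through cache rule in place. This sketch removes them as well, assuming the names used in this post. cleanup_demo is a hypothetical helper, and each command simply runs in order.

```shell
# Hypothetical helper: remove the demo namespace, Fargate profile and cache rule.
# cleanup_demo <cluster> <fargate-profile> <namespace> <cache-prefix> <region>
cleanup_demo() {
  local cluster=$1 profile=$2 namespace=$3 prefix=$4 region=$5
  # Deletes any workloads still left in the namespace
  kubectl delete namespace "$namespace"
  aws eks delete-fargate-profile --cluster-name "$cluster" \
    --fargate-profile-name "$profile" --region "$region"
  aws ecr delete-pull-through-cache-rule --ecr-repository-prefix "$prefix" \
    --region "$region"
}
```

Usage: cleanup_demo blog demo-fargate fargate ecr-public us-west-2. As far as I know, removing the rule does not delete the cached repository itself. That can be done with "aws ecr delete-repository --repository-name ecr-public/nginx/nginx --force".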

References

Amazon EKS pod execution IAM role

Announcing Pull Through Cache Repositories for Amazon Elastic Container Registry

Amazon EKS now supports Kubernetes 1.21

Amazon EKS on AWS Fargate Now Generally Available

Amazon EKS is ending support for Dockershim
